What might interest you? In this age, it’s safe to assume that you have given at least some digital declaration regarding what definitely interests you, and that it is being used to power an interest feed. Like any good detective, we are in the business of following these clues to reveal as yet undiscovered conclusions: posts, topics, pictures, videos, people, and other assorted data that will likely interest you. Fortunately, unlike detective work, digital sleuthing can be undertaken as a hard science, where algorithms and numbers are our forensic tools, culminating in an interest feed.

Neo4j Interest Feed Using Cypher

Finding commonalities among our digital footprints can be an intense task. In this example, we’ll use simple posts as our data type, with hashtags being the connecting clues. Let’s look at the user Adam Rainn in this graph. He’s made four posts, each with a different hashtag. He’s also following four famous users: Selah Britty, Brock Starr, Athena Leet, and Seamus Aktor. It’s only natural that posts from them should appear in Adam’s interest feed, especially those with similar hashtags. These can be found easily using this query:

    MATCH (u:User {userId: "u1"})-[:FOLLOWS]-(v)
    WITH u, v
    MATCH (u)-[:POST]->()-[:HASHTAG]->()<-[:HASHTAG]-(p)<-[:POST]-(v)
    RETURN p;
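To see what this pattern selects without a database at hand, here is a minimal Python sketch of the same idea: keep the posts written by followed users that share at least one hashtag with the user's own posts. All user ids, post ids, and hashtags are hypothetical toy data, not the graph from this example.

```python
# Toy data: every id and hashtag below is hypothetical.
follows = {"u1": {"u2", "u3"}}  # u1 follows u2 and u3

# post id -> (author id, set of hashtags)
posts = {
    "p1": ("u1", {"graphs"}),
    "p2": ("u1", {"cypher"}),
    "p3": ("u2", {"graphs", "movies"}),
    "p4": ("u3", {"cooking"}),
    "p5": ("u4", {"cypher"}),  # u4 is not followed by u1
}


def shared_hashtag_posts(user_id):
    """Return ids of posts by followed users that share a hashtag with the user's posts."""
    # Collect every hashtag the user has used on their own posts.
    own_tags = set()
    for author, tags in posts.values():
        if author == user_id:
            own_tags |= tags
    followed = follows.get(user_id, set())
    # Keep posts whose author is followed and whose hashtags overlap own_tags.
    return sorted(
        post_id
        for post_id, (author, tags) in posts.items()
        if author in followed and tags & own_tags
    )


print(shared_hashtag_posts("u1"))
```

Only `p3` survives here: `p4` shares no hashtag, and `p5` shares one but comes from a user who is not followed.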

This would get us some posts that we’re looking for, but this case is a bit trivial. We can sort through these much better if we weigh the posts according to how many hashtags they have in common:

    MATCH (u:User {userId: "u1"})-[:FOLLOWS]-(v)
    WITH u, v
    // cr: how many of u's posts carry hashtag h
    MATCH (u)-[:POST]->()-[r:HASHTAG]->(h)
    WITH u, v, h, COUNT(r) AS cr
    // cs: how many of those hashtags appear on post p
    MATCH (v)-[:POST]->(p)-[s:HASHTAG]->(h)
    WITH p, cr, COUNT(s) AS cs
    RETURN p
    ORDER BY cr * cs DESC;
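The weighting can be sketched in plain Python as well. This is a simplified model under stated assumptions, not a translation of the query: it gives each post a single total (the sum, over the hashtags it shares with the user, of how often the user has used that hashtag), whereas the query produces one row per post and `cr` value. The data and names are hypothetical.

```python
from collections import Counter

# Hypothetical toy data: (author id, post id, hashtags).
posts = [
    ("u1", "a1", {"graphs"}),
    ("u1", "a2", {"graphs", "cypher"}),
    ("u1", "a3", {"neo4j"}),
    ("u2", "b1", {"graphs", "cypher"}),
    ("u2", "b2", {"neo4j"}),
    ("u3", "c1", {"graphs"}),
    ("u3", "c2", {"movies"}),
]
follows = {"u1": {"u2", "u3"}}


def rank_followed_posts(user_id):
    """Rank followed users' posts by overlap with the user's hashtag usage."""
    # How often the user has used each hashtag (the role `cr` plays in the query).
    usage = Counter(
        tag for author, _, tags in posts if author == user_id for tag in tags
    )
    followed = follows.get(user_id, set())
    scores = {}
    for author, post_id, tags in posts:
        if author not in followed:
            continue
        # A Counter returns 0 for hashtags the user never used.
        score = sum(usage[tag] for tag in tags)
        if score > 0:
            scores[post_id] = score
    # Highest score first; ties broken by post id for a stable order.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


print(rank_followed_posts("u1"))
```

With this data, `b1` ranks first because it shares two hashtags, one of which the user has used twice; `c2` drops out because it shares none.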

But what good is an interest feed if it only tells us that we like things from people we’ve already declared we like? Let’s see what other discoveries we can make. You’ll notice a sixth user in this graph, Yun Nowne. Apparently, he’s made several posts with hashtags that Adam’s been using, and he even follows the same celebrities. How well can Cypher detect such users and reveal them to Adam?

    MATCH (u:User {userId: "u1"})-[:POST]->()-[r:HASHTAG]->(h)
    WITH u, h, COUNT(r) AS cr
    MATCH (h)<-[s:HASHTAG]-(p)<-[:POST]-(v)
    WHERE u <> v
    WITH p, cr, COUNT(s) AS cs
    RETURN p
    ORDER BY cr * cs DESC;
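The query above returns posts. If the goal is to surface the users behind them, one possible extension is to add up the scores per author and keep only authors who are not yet followed. The Python sketch below illustrates that idea on hypothetical data; the per-author totals and the "not yet followed" filter are assumptions of this sketch and are not part of the query.

```python
from collections import Counter

# Hypothetical toy data: (author id, hashtags of one post).
follows = {"u1": {"u2"}}
posts = [
    ("u1", {"graphs"}),
    ("u1", {"cypher"}),
    ("u2", {"graphs"}),
    ("u6", {"graphs", "cypher"}),
    ("u6", {"cypher"}),
    ("u7", {"movies"}),
]


def suggest_authors(user_id):
    """Return (author, score) pairs for unfollowed authors with overlapping hashtags."""
    # How often the user has used each hashtag.
    usage = Counter(tag for author, tags in posts if author == user_id for tag in tags)
    totals = Counter()
    for author, tags in posts:
        if author == user_id:  # mirrors WHERE u <> v
            continue
        totals[author] += sum(usage[tag] for tag in tags)
    already_followed = follows.get(user_id, set())
    # most_common() yields authors from highest to lowest total.
    return [
        (author, score)
        for author, score in totals.most_common()
        if score > 0 and author not in already_followed
    ]


print(suggest_authors("u1"))
```

Here only `u6` is suggested: `u2` also overlaps but is already followed, and `u7` shares no hashtags.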

The third and fifth posts on this list come from Yun. And there’s no reason to stop here. As always, more data can yield more accurate (and more meaningful) predictions. In a denser graph, we could include the weight of the users we’re following based on how many of their posts we’ve liked, or how frequently our

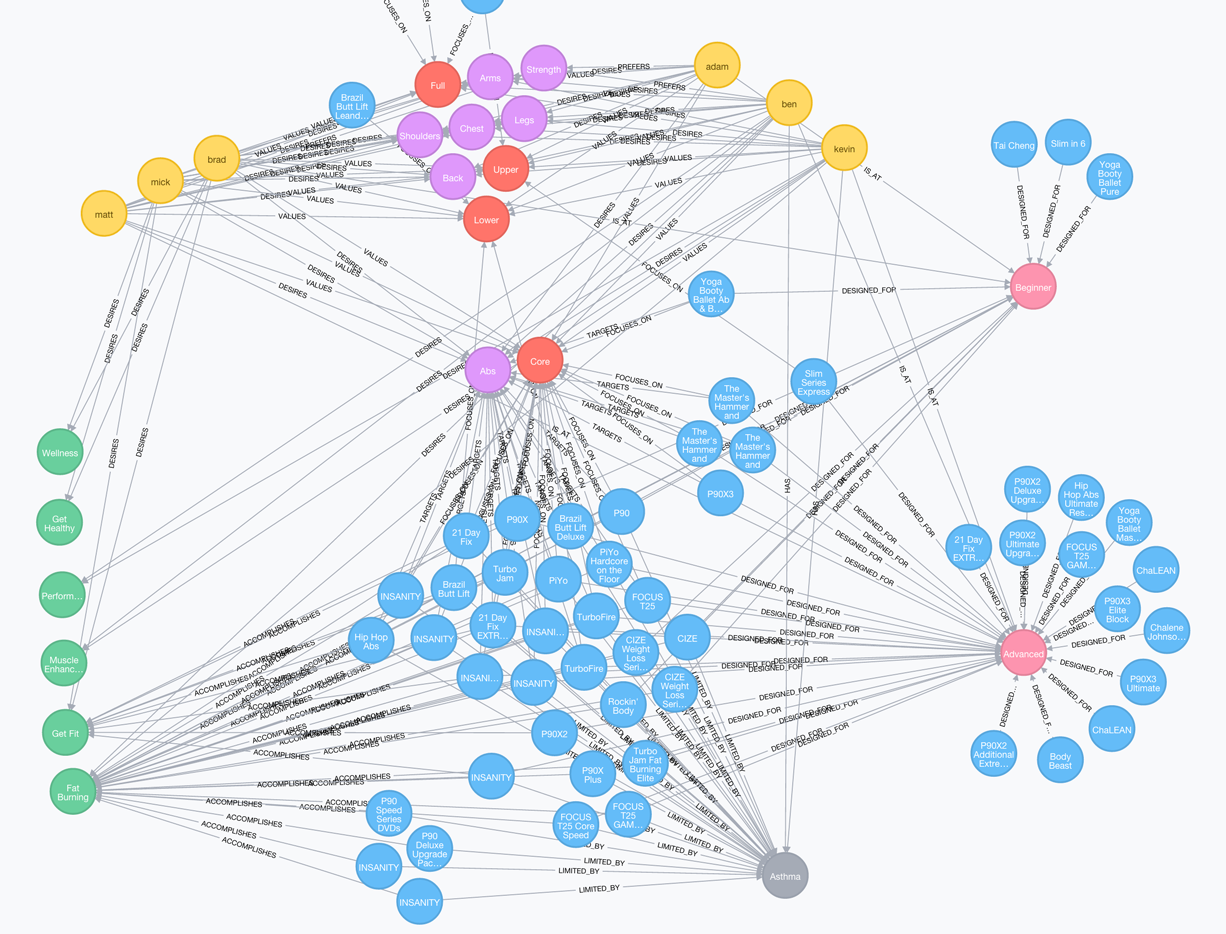

